Docker with WSL2

Docker is a platform that enables the containerization of applications. Containerization lets you package an application and its dependencies into a single, “portable” unit called a container. This ensures that the application runs consistently across different computing environments (Linux, Windows, macOS, etc.). Docker is widely used by software developers and system administrators to simplify the deployment, scaling, and management of applications. Containers share the host’s kernel, which means a Linux image (e.g., Ubuntu) can’t run directly on a Windows or macOS kernel. On Windows, Docker Desktop supplies a Linux kernel via WSL2 (or Hyper-V on older machines).

For example, you can use Docker to run databases like MongoDB, web servers like Nginx, or even tools for running large language models (LLMs) such as Ollama, all in isolated containers (see the diagram below). This makes it easy to test, develop, and deploy software without worrying about dependency conflicts or differences between operating systems.

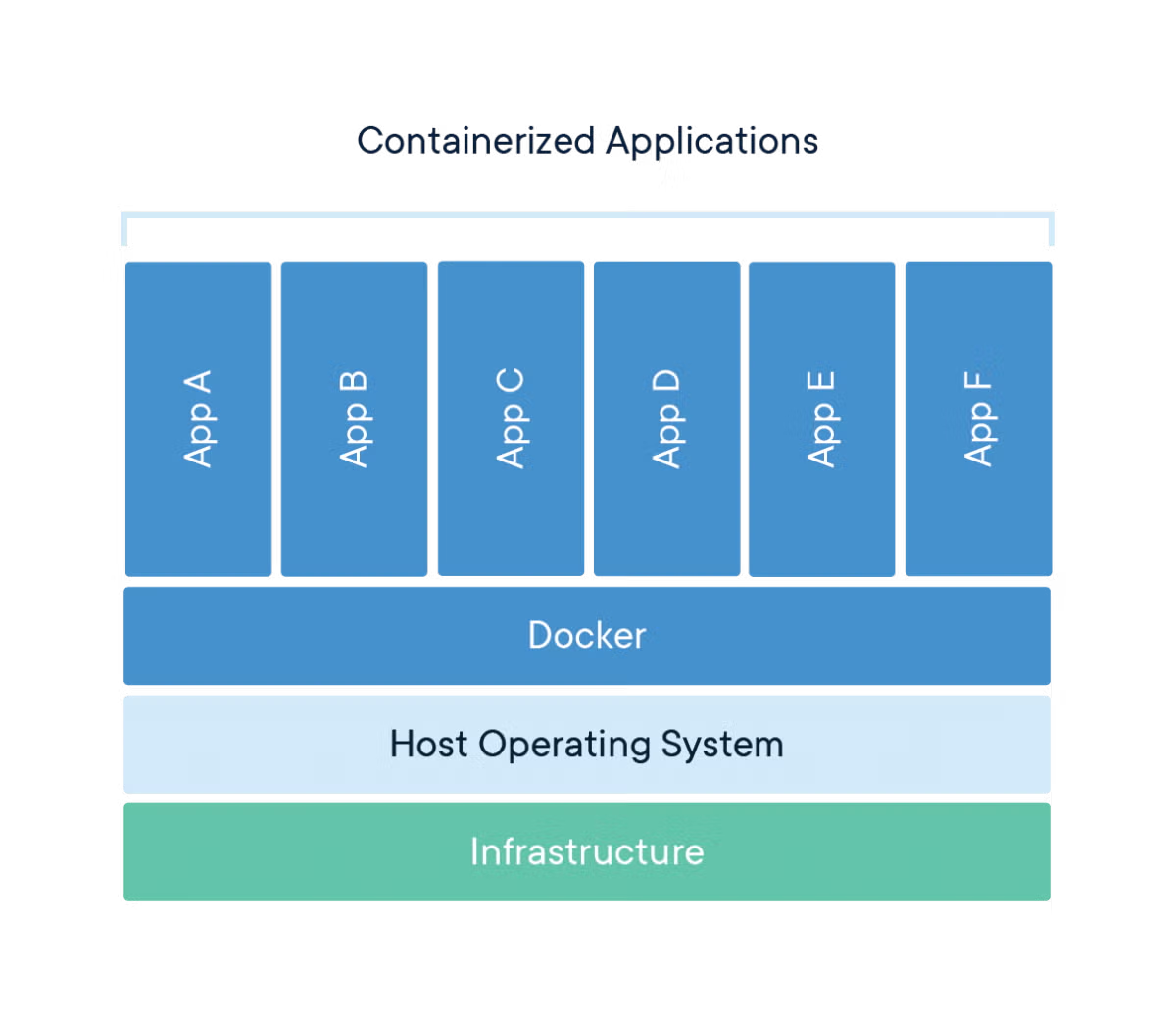

In the image above, you can see how Docker containers abstract the underlying infrastructure. Each application (Apps A-F) runs inside its own container, sharing the host operating system’s kernel while remaining isolated from the others. This isolation improves security and resource management, and it makes it easy to run multiple applications on the same host.
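As a concrete how-to for the MongoDB, Nginx, and Ollama examples, the Python sketch below builds `docker run` command lines for each service. It assumes the official `mongo`, `nginx`, and `ollama/ollama` images from Docker Hub and their usual container ports (27017, 80, and 11434); the host-side port 8080 for Nginx, the container names, and the helper `docker_run_args` are illustrative choices, not anything Docker prescribes. It only prints the commands; passing one of the lists to `subprocess.run(args, check=True)` would actually start that container if the daemon is running.

```python
from __future__ import annotations

import shlex


def docker_run_args(image: str, name: str, ports: dict[int, int],
                    volumes: dict[str, str] | None = None) -> list[str]:
    """Build a `docker run` argument list for a detached, named container.

    ports maps host port -> container port; volumes maps a named volume
    (or host path) -> mount path inside the container.
    """
    args = ["docker", "run", "-d", "--name", name]
    for host_port, container_port in ports.items():
        args += ["-p", f"{host_port}:{container_port}"]
    for source, target in (volumes or {}).items():
        args += ["-v", f"{source}:{target}"]
    args.append(image)  # the image name must come after all options
    return args


# The three services from the text, each in its own isolated container.
SERVICES = [
    docker_run_args("mongo", "mongo", {27017: 27017}),
    docker_run_args("nginx", "web", {8080: 80}),
    docker_run_args("ollama/ollama", "ollama", {11434: 11434},
                    {"ollama": "/root/.ollama"}),
]

if __name__ == "__main__":
    for args in SERVICES:
        print(shlex.join(args))
```

Because each service gets its own container, the three can run side by side on one host without sharing dependencies.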

Docker is good for:

- Ensuring consistent environments across development, testing, and production

- Simplifying application deployment and scaling

- Running multiple applications or services on a single host without conflicts

- Rapidly prototyping and testing new software

- Isolating applications for security and resource control

With Docker and WSL2 (Windows Subsystem for Linux 2), Windows users can run Linux containers on a genuine Linux kernel that WSL2 runs in a lightweight virtual machine, making it easier to develop and deploy cross-platform applications.
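Because containers share the host’s kernel, a Linux container started through Docker Desktop on Windows runs on the WSL2 kernel rather than on Windows itself. The small Python sketch below checks which kernel a process is running on. It assumes that WSL kernel release strings contain "microsoft" (for example `5.15.153.1-microsoft-standard-WSL2`); that is a common heuristic, not an official API, and `is_wsl_kernel` is a hypothetical helper name.

```python
import platform


def is_wsl_kernel(release: str) -> bool:
    """Heuristic: WSL kernels include "microsoft" in their release string.

    Assumption based on observed strings such as
    "5.15.153.1-microsoft-standard-WSL2"; not an official API.
    """
    return "microsoft" in release.lower()


if __name__ == "__main__":
    # On Linux, platform.release() reports the running kernel's release string.
    # Inside a Linux container that is the host's kernel, since containers
    # bring no kernel of their own.
    release = platform.release()
    verdict = "a WSL kernel" if is_wsl_kernel(release) else "not a WSL kernel"
    print(f"{release}: {verdict}")
```

Running the same check inside a container, e.g. `docker run --rm python:3 python -c "import platform; print(platform.release())"`, should print the WSL2 kernel’s release string, because the container has no kernel of its own.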

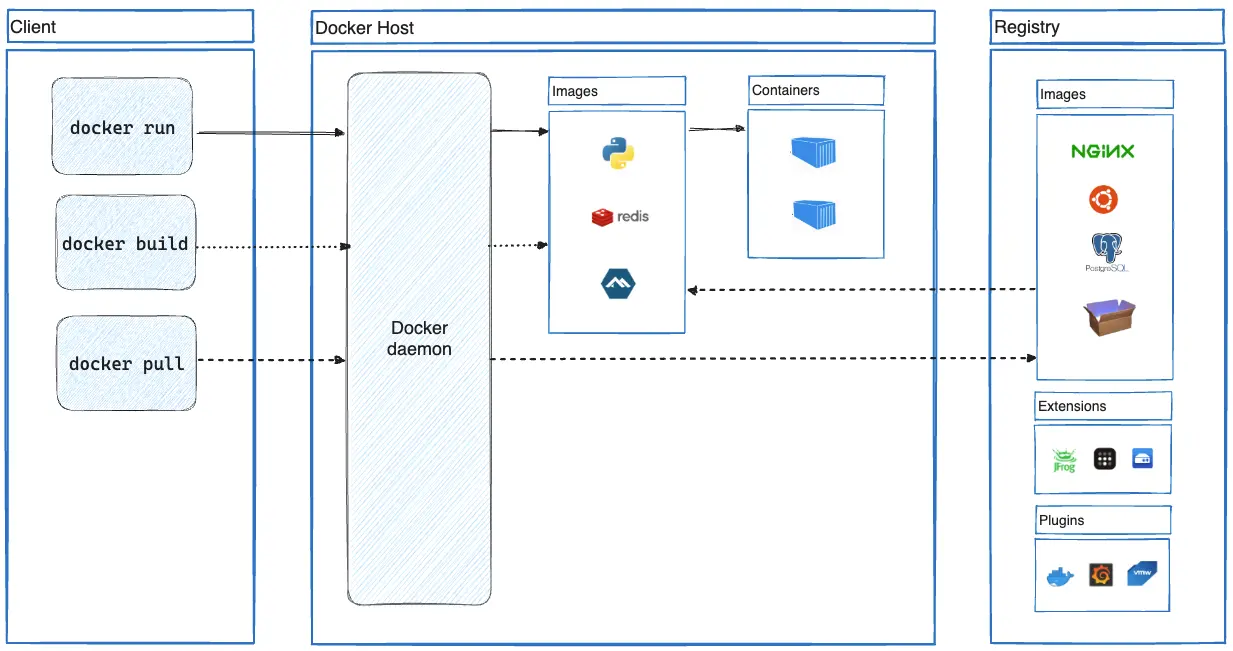

I was curious about how Docker really functions behind the scenes, so I [started doing some digging](https://docs.docker.com/get-started/docker-overview/) and learned more about the underlying process. Docker is written in the Go programming language. When we install the application, we’re installing a pre-compiled binary built from Go, with Electron as the front-end and some C++ helpers for low-level OS interactions. There’s more to learn about how that is built and packaged, but it’s less about Docker itself and more about software deployment in general.

Docker uses a client-server architecture in which the Docker client (the CLI or Docker Desktop) communicates with a Docker daemon. The daemon is what provides most of Docker’s functionality, such as building and running containers. The client and daemon communicate through a Representational State Transfer (REST) API over UNIX sockets or a network interface (a client can also connect to a remote daemon).
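To make the client-server split concrete, the Python sketch below acts as a very small Docker client: it sends plain HTTP requests to the daemon’s REST API over a UNIX socket using only the standard library. It assumes the default socket path `/var/run/docker.sock` (as seen from a Linux shell, such as a WSL2 distribution with Docker Desktop’s WSL integration enabled) and the Engine API’s `/_ping` and `/version` endpoints; `UnixHTTPConnection` and `engine_get` are hypothetical names. It does roughly what `curl --unix-socket /var/run/docker.sock http://localhost/_ping` does.

```python
from __future__ import annotations

import http.client
import socket

DOCKER_SOCKET = "/var/run/docker.sock"  # assumed default on Linux / WSL2


class UnixHTTPConnection(http.client.HTTPConnection):
    """An HTTPConnection that speaks HTTP over a UNIX domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 5.0) -> None:
        # "localhost" only fills in the HTTP Host header; no DNS lookup or TCP happens.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self) -> None:
        # Replace the usual TCP connection with a connection to the socket file.
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        try:
            sock.connect(self.socket_path)
        except OSError:
            sock.close()
            raise
        self.sock = sock


def engine_get(path: str, socket_path: str = DOCKER_SOCKET) -> tuple[int, bytes]:
    """Send GET <path> to the Docker Engine REST API; return (status, body)."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        response = conn.getresponse()
        return response.status, response.read()
    finally:
        conn.close()
```

With the daemon running, `engine_get("/_ping")` should return status 200 with the body `OK`, and `/version` should return a JSON document describing the engine. The official Docker SDKs wrap these same HTTP calls.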

On Windows, at least, the installer sets up several components that work together. The daemon process (dockerd) didn’t appear in my Windows Task Manager, but that appears to be normal: Docker Desktop creates a hidden Linux distribution in WSL2 and runs the Linux build of the daemon inside it. Windows only sees that whole distribution as a single virtual machine, so its individual processes don’t show up.

As seen in the image above, Docker uses registries, which store images. Docker Hub is a public registry that anyone can use, but development teams can also host their own private registries and images.
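Because images can come from Docker Hub or from a private registry, the CLI expands short names like `nginx` into a full reference (`docker.io/library/nginx:latest`). The Python sketch below approximates those normalization rules: the first path component counts as a registry host only if it contains a `.` or `:` or is `localhost`, official Docker Hub images get the `library/` namespace, and the tag defaults to `latest`. It is a simplified illustration (no digest support, no validation), not Docker’s actual parser, and `parse_image_ref` and `ImageRef` are hypothetical names.

```python
from dataclasses import dataclass

DEFAULT_REGISTRY = "docker.io"  # Docker Hub
DEFAULT_TAG = "latest"


@dataclass(frozen=True)
class ImageRef:
    registry: str
    repository: str
    tag: str

    def __str__(self) -> str:
        return f"{self.registry}/{self.repository}:{self.tag}"


def parse_image_ref(ref: str) -> ImageRef:
    """Split a reference like "nginx" or "ghcr.io/org/app:1.0" into parts.

    Simplified: digests (name@sha256:...) and validation are not handled.
    """
    # A tag is a ":" after the last "/"; a ":" before it belongs to a registry port.
    name, tag = ref, DEFAULT_TAG
    colon = ref.rfind(":")
    if colon > ref.rfind("/"):
        name, tag = ref[:colon], ref[colon + 1:]

    # The first path component is a registry host only if it looks like one.
    first, sep, rest = name.partition("/")
    if sep and ("." in first or ":" in first or first == "localhost"):
        return ImageRef(first, rest, tag)

    # Otherwise the image lives on Docker Hub; official images use "library/".
    repository = name if "/" in name else f"library/{name}"
    return ImageRef(DEFAULT_REGISTRY, repository, tag)
```

For example, `docker pull nginx` pulls `docker.io/library/nginx:latest`, while a name such as `localhost:5000/myapp:1.0` points at a private registry listening on port 5000.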

Images are read-only templates that contain the instructions for creating a Docker container. Many images are based on other images with some customization (extra packages, different parameters, etc.). Containers are running instances of images, much as a process is a program in execution. We can interact with containers via the Docker Engine API, the Docker Desktop GUI, or the CLI. By default, a container is isolated from other containers and from the host, but you can control how isolated its network, storage, and other subsystems are.
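The image/container relationship can be pictured as a stack of read-only layers with a thin writable layer on top for each container, which is how Docker’s storage documentation describes it. The Python toy model below illustrates only that idea: `Image`, `Container`, and `derive` are hypothetical names, files are plain strings in dictionaries, and real Docker stores layers on disk through a union/overlay filesystem rather than anything like this.

```python
from __future__ import annotations

from collections.abc import Mapping
from dataclasses import dataclass, field
from types import MappingProxyType


@dataclass(frozen=True)
class Image:
    """Toy read-only image: an ordered stack of layers (path -> file content)."""

    name: str
    layers: tuple[Mapping[str, str], ...]

    def derive(self, name: str, changes: dict[str, str]) -> Image:
        # A new image "based on" this one: the same layers plus one more on top.
        return Image(name, self.layers + (MappingProxyType(dict(changes)),))


@dataclass
class Container:
    """Toy running instance: a private writable layer over a shared image."""

    image: Image
    writable: dict[str, str] = field(default_factory=dict)

    def read(self, path: str) -> str | None:
        # The container's own layer wins, then image layers from top to bottom.
        if path in self.writable:
            return self.writable[path]
        for layer in reversed(self.image.layers):
            if path in layer:
                return layer[path]
        return None

    def write(self, path: str, content: str) -> None:
        # Writes never modify the image; they land in this container's layer.
        self.writable[path] = content


base = Image("ubuntu-like", (MappingProxyType({"/etc/os-release": "Ubuntu"}),))
app = base.derive("my-app", {"/app/main.py": "print('hello')"})
c1, c2 = Container(app), Container(app)
c1.write("/tmp/log.txt", "written by c1")
print(c1.read("/tmp/log.txt"), c2.read("/tmp/log.txt"))  # written by c1 None
```

In this model, writes land only in each container’s own layer, so two containers started from the same image never see each other’s changes and the image itself stays untouched.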